Announcing our Fall 2025 ETP Fellows!

And a digest of the latest news in ethical tech

We are thrilled to announce the ETP Fellows Program cohort for Fall 2025!

These Fellows bring a wealth of experience to the program. Their work spans fields like content and policy that make the internet safer and more inclusive, flavor and fragrance powered by AI, and data science fused with anthropology.

Please join us in welcoming Audrey Kennedy, Eden Senay, Joshua Evans, Kiranmayee Suryadevara, Leah Ferentinos, Melissa Major, Michelle Dong, Rossy Esmil Araujo, Shoba Varma, Steven Chu, Tyler Celestin, William (Billy) Lynn, Xenia Masl, and Zhamilya Bilyalova.

In the coming weeks, they’ll be diving into our proprietary curriculum to understand the most pressing issues in ethical tech, and learning from some of the foremost leaders in the field.

And thank you to everyone who applied to the ETP Fellows Program. The selection process was highly competitive, and we’re working to expand our programming in the Spring of 2026.

We’re also celebrating three of our Spring ’25 Fellows, who are using the lessons they learned in our program to transform the tech innovation ecosystem.

George Nunez is the founder of Bronx Tech Hub, a non-profit organization dedicated to building a thriving tech ecosystem that reflects the needs of Bronxites. Read more about his work in this article by The New York Amsterdam News: ‘George Nunez wants to change the narrative about The Bronx through tech’.

Bobby Zipp has founded a new company, Firstep, which helps people plan for their future families in ways that match their beliefs and values, whether with co-parents, partners, or support networks. You can find out the latest on their work here.

Nara Valera-Simeon was selected as one of more than 70 young leaders worldwide for ‘CTRL + Future: A Youth Forum on Responsible AI Summit’, which explores how youth can shape the future of AI governance.

We can’t wait to see what our Fellows do next! To find out more about our Fellows and the ETP Fellows Program, check out our website and join us on LinkedIn.

Ever wish you could treat an ethical technologist to dinner?

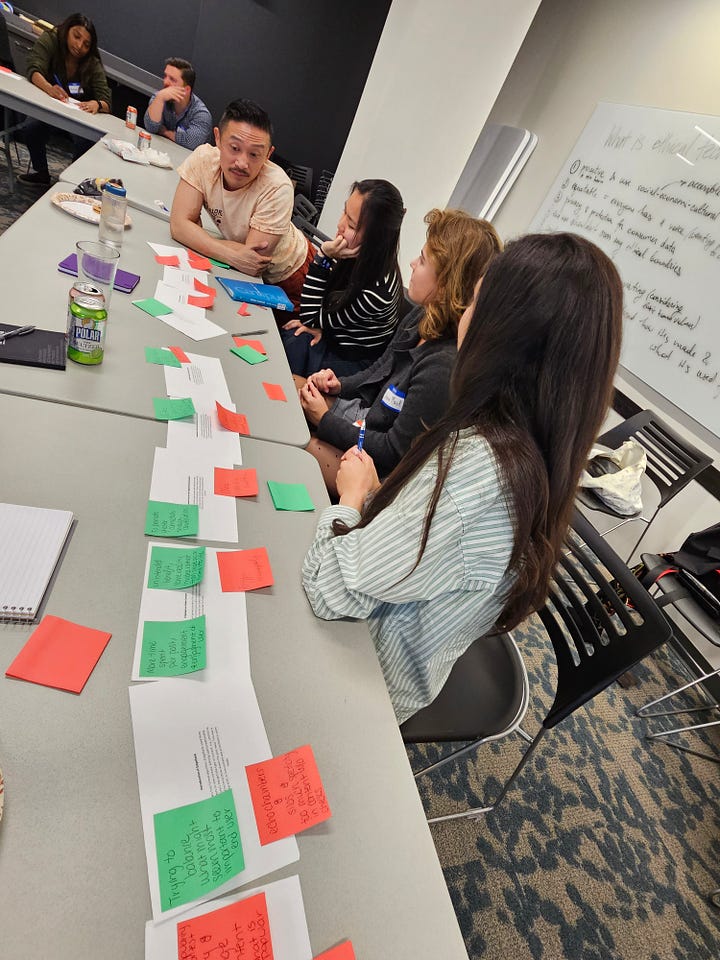

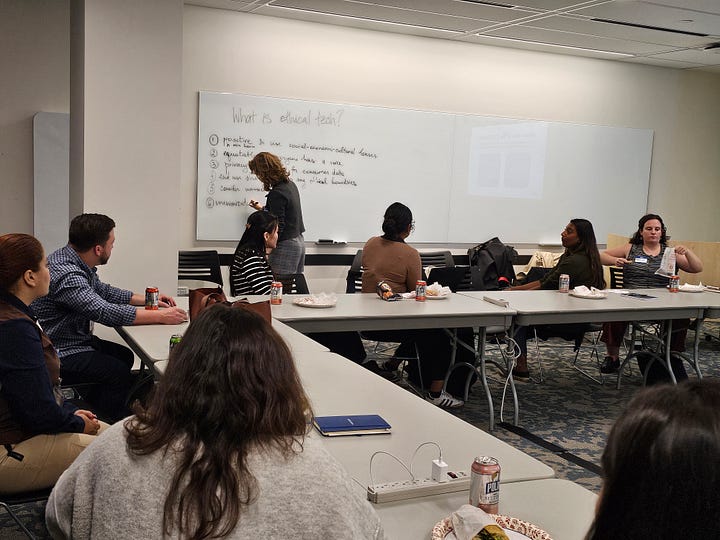

Us too! That’s why we serve sandwiches and snacks at all of our in-person training sessions. It’s more than just a meal: it’s a way for our Fellows to build community and be ready to dig deep into the most pressing issues in ethical tech and responsible AI.

$25 is all we need to provide one meal, and even the smallest contributions help power our work.

With gratitude,

Jennie & Nancy

Here’s what we’ve been reading and listening to, and you should be too:

🙈 Privacy

The New York Times: We Are Tech Privacy Reporters. Our Music Habits Got Doxxed.

The “Panama Playlists” exposed the Spotify listening habits of some famous people — and two journalists who didn’t know as much about protecting their privacy as they had thought.

💸 Money

The New York Times: The A.I. Spending Frenzy Is Propping Up the Real Economy, Too

The trillions of dollars that tech companies are pouring into new data centers are starting to show up in economic growth. For now, at least.

The Verge: The web has a new system for making AI companies pay up

The mission is to keep the web sustainable.

🧠 Mental Health

New York Post: How ChatGPT fueled delusional man who killed mom, himself in posh Conn. town

Stein-Erik Soelberg, 56, had confided his darkest suspicions to OpenAI’s popular bot, which he nicknamed “Bobby,” before the shocking murder-suicide.

Ted Radio Hour: Are the Kids Alright?

Being a kid, or raising one, has never been tougher. From AI in classrooms to social media pressures to economic stress, kids are navigating a minefield. This episode digs into AI in education and student well-being.

BBC: Safety of AI chatbots for children and teens faces US inquiry

The Federal Trade Commission is inquiring into seven tech companies, including Snap, Meta, OpenAI, and xAI.

The Atlantic: AI Is a Mass-Delusion Event

Three years in, one of AI’s enduring impacts is to make people feel like they’re losing it.

Eli Pariser: Think TikTok is addictive? We haven’t seen anything yet.

Everyone needs to pay attention to the most recent AI products rolled out by OpenAI, Meta, and Google, because they tell us something important about the future of digital media. Together, they signal that we’re entering a new era of hyper-personalized, hyper-addictive media that’s likely to be orders of magnitude more engaging and addictive than TikTok.

🤔 Attitudes on AI

Pew Research Center: How Americans View AI and Its Impact on People and Society

Americans are worried about using AI more in daily life, seeing harm to human creativity and relationships. But they’re open to AI use in weather forecasting, medicine and other data-heavy tasks.

Bloomberg: The AI Doomers Are Losing the Argument

As AI advances and the incentives to release products grow, safety research on superintelligence is playing catch-up.

The New York Times: A.I.’s Prophet of Doom Wants to Shut It All Down

Eliezer Yudkowsky has spent the past 20 years warning A.I. insiders of danger. Now, he’s making his case to the public.

The San Francisco Standard: Tech Bro 2.0: The new Silicon Valley archetype dominating the AI age

He’s not what he used to be. He’s jacked, cracked, and thinks he might save America.

Jay Van Bavel, PhD: Sycophantic AI increases attitude extremity and overconfidence

In a new paper, researchers found that sycophantic AI chatbots make people more extreme, operating like an echo chamber. Yet people prefer sycophantic chatbots and see them as less biased. Only open-minded people prefer disagreeable chatbots.

📃 Policy and Regulation

Semafor: Anthropic irks White House with limits on models’ use

The AI company declined to allow requests by contractors working with federal law enforcement.

Deadline: With Hollywood On Edge About AI, TV Academy Establishes Guidelines For Members

A Television Academy task force developing what it calls “responsible AI and production standards” has finalized a set of guidelines for members.

Gary Marcus: AI red lines

We urge governments to reach an international agreement on red lines for AI — ensuring they are operational, with robust enforcement mechanisms — by the end of 2026.

NPR: What does the Google antitrust ruling mean for the future of AI?

A federal judge’s mild ruling in the Justice Department’s suit over Google’s search engine monopoly has critics worried that the tech giant can now monopolize artificial intelligence.

Anna Cook, M.S.: Neglecting accessibility reveals a fundamental misunderstanding of what makes good design.

The American federal government unveiled its “America by Design” initiative through an executive order, and shortly after, launched a website to showcase it.

TechPolicy.Press: How Google Paid the Media Millions to Avoid Regulatory Pressure

Google has signed more than 2,000 contracts with news outlets worldwide in the past five years. A team of journalists reviewed its strategy.

The Wrap: AI ‘Actress’ Tilly Norwood Will Be Signed by an Agency ‘In the Coming Months,’ Her Creator Claims

A computer-generated actress named Tilly Norwood will be signed by an agency “in the coming months,” a claim made at the Zurich Summit by her creator, little-known actress, comedian and digital producer Eline van der Velden.

🌎 Environmental Impact

The New York Times: A.I.’s Environmental Impact Will Threaten Its Own Supply Chain

Spruce Pine, N.C., supplies the world’s highest-purity quartz, a mineral that keeps the A.I. revolution afloat. What are the consequences?

🩺 Medicine

The Guardian: New AI tool can predict a person’s risk of more than 1,000 diseases, say experts

Delphi-2M uses diagnoses, ‘medical events’ and lifestyle factors to create forecasts for next decade and beyond

The New Yorker: If A.I. Can Diagnose Patients, What Are Doctors For?

Large language models are transforming medicine—but the technology comes with side effects.

🎓 Research and Resources

Bethan Jinkinson: BBC and AI Literacy

The BBC launched a new series of four short videos on AI literacy, with the aim of empowering audiences to use AI smartly and safely. The videos were developed with audiences new to generative AI in mind.

Cornell University: Novel AI Camera Camouflage: Face Cloaking Without Full Disguise

This study demonstrates a novel approach to facial camouflage that combines targeted cosmetic perturbations and alpha transparency layer manipulation to evade modern facial recognition systems.

